Artificial Intelligence has become a household word. Some people are optimistic, some are skeptical, and some worry that it will replace us all. Either way, it can no longer be ignored. AI looks like it is here to stay, and the best we can do now is prepare for the future. Just as the wheel, machines, the calculator and the computer defined past generations, AI will be the landmark innovation for millennials and Gen Z. Let’s experience and embrace it together.

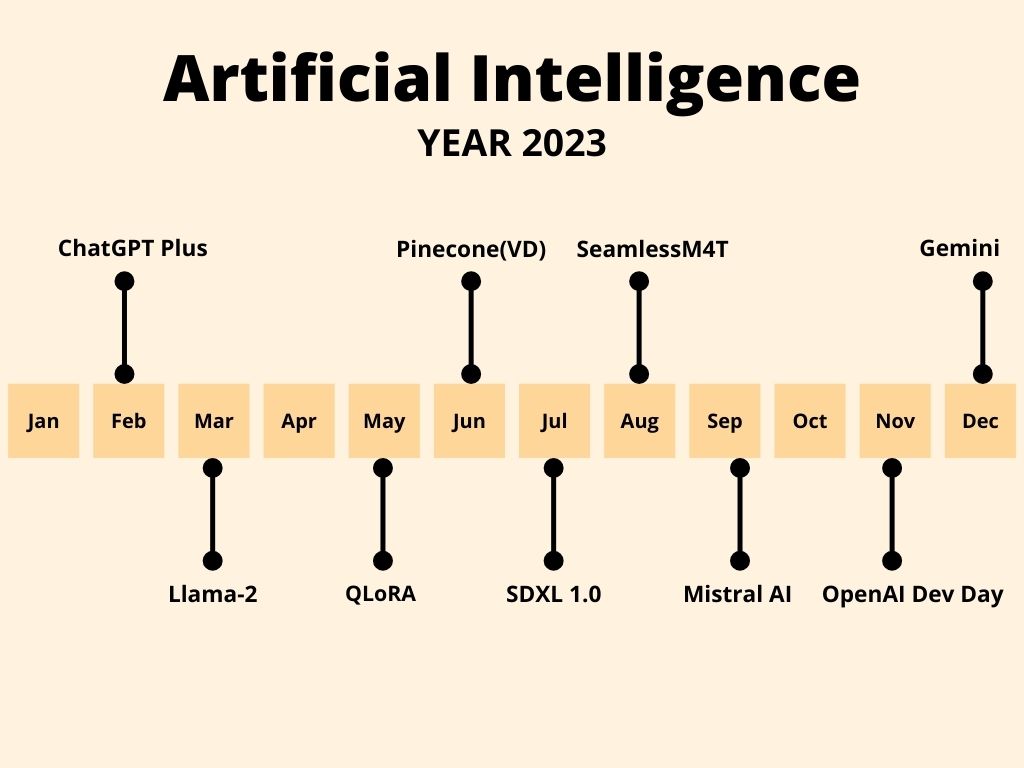

In this blog post, we discuss the key developments in AI in 2023: how conversational chat tools like ChatGPT and Bard have evolved, the rise of open source LLMs, the impact of AI on society, and a few predictions for 2024.

Evolution of ChatGPT and Bard

ChatGPT

ChatGPT, as we all know, was released on November 30, 2022, and it has come a long way since then. It is built on OpenAI’s GPT models, from GPT-3.5 at launch to GPT-4 Turbo today. Initially, ChatGPT was free for everyone, but in February 2023 OpenAI released a paid version called ChatGPT Plus that uses the latest GPT model. It offers advantages such as priority access, faster responses, and tools and plugins like Code Interpreter. I have used both versions and was slightly underwhelmed by ChatGPT Plus: it was much slower than the free version, and its responses were too verbose. However, this may have been because OpenAI was experiencing high demand at the time and had to pause ChatGPT Plus signups.

Another crucial part of OpenAI’s offerings is its API, which makes it easier to build LLM apps and integrate AI into existing systems. The API offers many models to choose from. The text-embedding-ada-002 model extracts embeddings, which are then stored in vector databases to enable semantic search. The DALL-E models are used for image generation, and Whisper is available for speech-to-text. Recently, at OpenAI DevDay, the company announced an Assistants API that lets developers build assistants that take actions such as searching the internet or booking tickets. All these APIs are paid, but they are among the cheapest on the market.
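To make the embeddings-plus-vector-database idea more concrete, here is a minimal sketch of semantic search in plain Python. It assumes the embeddings have already been computed (in practice by a model such as text-embedding-ada-002, whose vectors have 1,536 dimensions); the tiny three-dimensional vectors and the dictionary standing in for a vector database are hypothetical, made up purely for illustration. Documents are ranked by cosine similarity to the query vector, which is one common choice of similarity measure.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def semantic_search(query_vec, store, top_k=2):
    """Return the top_k (text, score) pairs from an in-memory stand-in for a vector database."""
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # most similar first
    return scored[:top_k]

# Hypothetical 3-dimensional embeddings; real ada-002 embeddings are much longer.
store = {
    "How do I reset my password?": [0.9, 0.1, 0.0],
    "Best hiking trails near Denver": [0.0, 0.2, 0.95],
    "Forgot my login credentials": [0.85, 0.2, 0.05],
}
query = [0.88, 0.15, 0.02]  # pretend embedding of "I can't sign in"

for text, score in semantic_search(query, store):
    print(f"{score:.3f}  {text}")
```

Note that the two account-related questions rank highest even though they share no keywords with each other: that is the point of searching by meaning rather than by exact words. A real vector database performs the same kind of ranking but typically uses approximate nearest-neighbour indexes so it does not have to scan every stored vector.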

Over the last year, ChatGPT has also become multi-modal, which means it can now understand and process other types of data, such as images and audio. We discuss multi-modal learning and Large Multi-Modal Models (LMMs) in a later section. You can also now talk to ChatGPT as if you were speaking on the phone, and at the time of writing, voice conversations have been made available to all free users as well.

Google’s Bard

Apart from ChatGPT, there is another conversational chatbot: Google’s Bard. It was released in March 2023 and has been in beta since then. Bard was initially built on the LaMDA models and later moved to PaLM 2. As of today, it is powered by Google’s latest model, Gemini. Bard is also multi-modal, so you can include images and audio in your prompts. Bard has had mixed reviews, and many people felt its responses were not as good as ChatGPT’s. However, it has advantages too: Bard is connected to several Google apps and can search Google to find relevant links.

ChatGPT seems to be the clear winner for now, but the ChatGPT vs Bard debate is still ongoing.

All of these are closed, proprietary models and products. OpenAI in particular has drawn a lot of criticism because it was initially positioned as a non-profit, yet it has not released its models or training data. This fueled demand for open source Large Language Models (LLMs).

Open Source LLMs

Meta, which owns Facebook, led this trend with the release of Llama 2 in July 2023. It was a significant improvement over Llama 1: it was trained on about 40% more data, has a longer context window, and uses grouped-query attention in its largest models. The models come in sizes ranging from 7 billion to 70 billion parameters, and in general, response quality improves as the parameter count grows. The 70B Llama 2 is said to perform better than GPT-3.5 on many tasks. Meta has also released specialized versions such as Code Llama for code generation.

Other well-known open source LLMs include Falcon 180B, Vicuna 13B and Mistral 7B. Unlike Llama 2, these models are not aligned for safety and should be used with caution. You can compare open source models on the Hugging Face Open LLM Leaderboard, where a new model seems to top the chart every week. This shows how rapidly the open source LLM movement is evolving. A key driver of this rise is how easy it has become to fine-tune LLMs with billions of parameters without consuming a ton of resources. Techniques such as LoRA (Low-Rank Adaptation) and QLoRA (Quantized Low-Rank Adaptation) have made fine-tuning very efficient.
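For readers curious about why LoRA is so cheap, here is a minimal, dependency-free sketch of its core idea; it is an illustration, not a training implementation. LoRA keeps a pretrained weight matrix W (size d x k) frozen and learns a low-rank update B·A, where B is d x r, A is r x k, and the rank r is much smaller than d and k; the effective weight is W + (alpha / r)·B·A. The helper names, the toy layer sizes and the 4096 x 4096 example layer with r = 8 are assumptions chosen for illustration. QLoRA applies the same idea on top of a base model whose frozen weights are quantized to 4 bits.

```python
import random

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
        for i in range(len(X))
    ]

def lora_weight(W, A, B, alpha, r):
    """Effective weight W + (alpha / r) * (B @ A). W is frozen; only A and B are trained."""
    delta = matmul(B, A)  # (d x r) times (r x k) gives a d x k low-rank update
    scale = alpha / r
    return [[W[i][j] + scale * delta[i][j] for j in range(len(W[0]))] for i in range(len(W))]

def trainable_params(d, k, r):
    """Trainable values: full fine-tuning updates all of W; LoRA trains only B and A."""
    return d * k, r * (d + k)

# Toy layer with hypothetical sizes: d = 4 outputs, k = 3 inputs, rank r = 1.
d, k, r, alpha = 4, 3, 1, 2.0
random.seed(0)
W = [[random.gauss(0, 1) for _ in range(k)] for _ in range(d)]     # frozen pretrained weight
A = [[random.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]  # r x k, small random init
B = [[0.0] * r for _ in range(d)]                                  # d x r, zero init
# Because B starts at zero, the adapted layer initially behaves exactly like W.
print(lora_weight(W, A, B, alpha, r) == W)  # True

full, lora = trainable_params(4096, 4096, 8)
print(full, lora)  # 16777216 65536
```

For that hypothetical 4096 x 4096 layer, rank-8 LoRA trains 65,536 values instead of about 16.8 million, roughly 0.4% of them, which is a big part of why fine-tuning fits on much smaller hardware.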

Although open source models are almost as good as the closed ones, they are still expensive to deploy and maintain. Cloud providers such as AWS and Azure offer options to deploy models like Llama 2, but you pay for hosting by the hour. Relatively speaking, OpenAI’s API is much cheaper and comes without the maintenance overhead. Let us hope this changes in 2024 and that getting open source models into production becomes easier.
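A quick back-of-the-envelope calculation hints at why self-hosting is costly: just holding a model’s weights in GPU memory takes roughly (number of parameters x bits per parameter / 8) bytes. The sketch below applies this rule of thumb to the Llama 2 sizes (7B, 13B and 70B) at 16-, 8- and 4-bit precision. It is a simplifying assumption that ignores activations, the KV cache and framework overhead, so real deployments need more memory than these figures.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed for the model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

# Llama 2 sizes at common precisions: 16-bit (fp16/bf16), 8-bit and 4-bit quantization.
for num_params in (7e9, 13e9, 70e9):
    sizes = ", ".join(
        f"{bits}-bit: {weight_memory_gb(num_params, bits):.1f} GB" for bits in (16, 8, 4)
    )
    print(f"{num_params / 1e9:.0f}B parameters -> {sizes}")
```

At 16-bit precision, the 70B model needs about 140 GB for its weights alone, more than fits on a single 80 GB GPU, so serving it means renting multiple GPUs by the hour or quantizing it.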

Multi-modal Artificial Intelligence

So far, we have discussed LLMs that focus only on text. But 2023 also brought several breakthroughs in other modalities, such as images, audio and video. For images, text-to-image models like Stable Diffusion, the DALL-E series and Midjourney released major new versions that generate impressive images from text prompts. However, generated images can often still be distinguished from real photographs: people in them sometimes have distorted features, such as malformed fingers or noses. For video, Pika Labs came up with a tool that generates videos from text prompts.

For audio, OpenAI’s Whisper was widely regarded as one of the best speech-to-text models on the market. In August 2023, Meta released SeamlessM4T, which transcribes speech and performs speech-to-speech translation. If you look closely, all of these models work with a fixed pair of input and output types: an image generation tool, for example, always takes text in and produces an image out. Recently, however, there has been a surge in models flexible enough to handle several types of input. This matters because it mirrors how we humans perceive the world around us, and these models are trying to capture that experience. Notable models in this area include GPT-4 Vision, Microsoft’s KOSMOS and LLaVA-1.5.

Impact of Artificial Intelligence on Society

AI has been shown to significantly improve the productivity of knowledge workers. There is no denying that AI has entered many areas of our lives, and almost every company wants to integrate it into its workflows. In software development, AI tools suggest code, fix bugs and write automated tests. In financial applications, AI is used for fraud detection and credit risk assessment. In healthcare, AI is used to give personalized medical advice, analyze radiology reports and predict diseases.

Job Displacement

All these positives are great, but we do need to address the elephant in the room. If an AI system becomes so powerful that it is almost human-like, what is the need for humans? After all, an AI is available 24/7 without getting tired. There is already an AI influencer on Instagram who reportedly earns $11,000 per month. Social media feeds are filled with AI-generated posts. Customer service jobs are slowly being automated, and we already discuss our issues with chatbots more often than with humans.

That brings us to the million-dollar question: will AI replace humans? Eventually, yes, but I do not see it happening in the next five years. In my experience, it takes time for most people to adopt a new technology, but at the current pace we might be living in a very different world within a decade. There is talk of Universal Basic Income (UBI), but whether it is feasible at scale remains an open question. However, there is a silver lining. The World Economic Forum has published lists of jobs that AI is unlikely to replace and jobs that AI will create. We can also develop soft skills and domain expertise to keep an edge over AI.

Future Predictions (2024)

In 2024, maybe we will finally learn the real reason behind Sam Altman’s firing and rehiring as CEO of OpenAI. Jokes aside, there are many exciting developments to look forward to on the technical front.

- Text-to-audio and text-to-video models will become more advanced, and their current bottlenecks will be addressed.

- Google’s Gemini, released in December 2023, sets a precedent for 2024; I predict there will be many more attempts at building multi-modal AI.

- In 2023, there was a scramble for GPUs, and NVIDIA’s stock shot up on the sudden demand as everybody tried to build their own models. This has also sparked discussion of Quantum Machine Learning as a way to speed up development.

- Developing and maintaining AI models consumes a lot of resources and increases their carbon footprint. This can be reduced with more efficient techniques for training and fine-tuning models. This is crucial, as it is high time we moved towards a sustainable world. 2024 will be the year of Sustainable AI.

We will go into more depth on these topics in upcoming posts. Stay tuned.