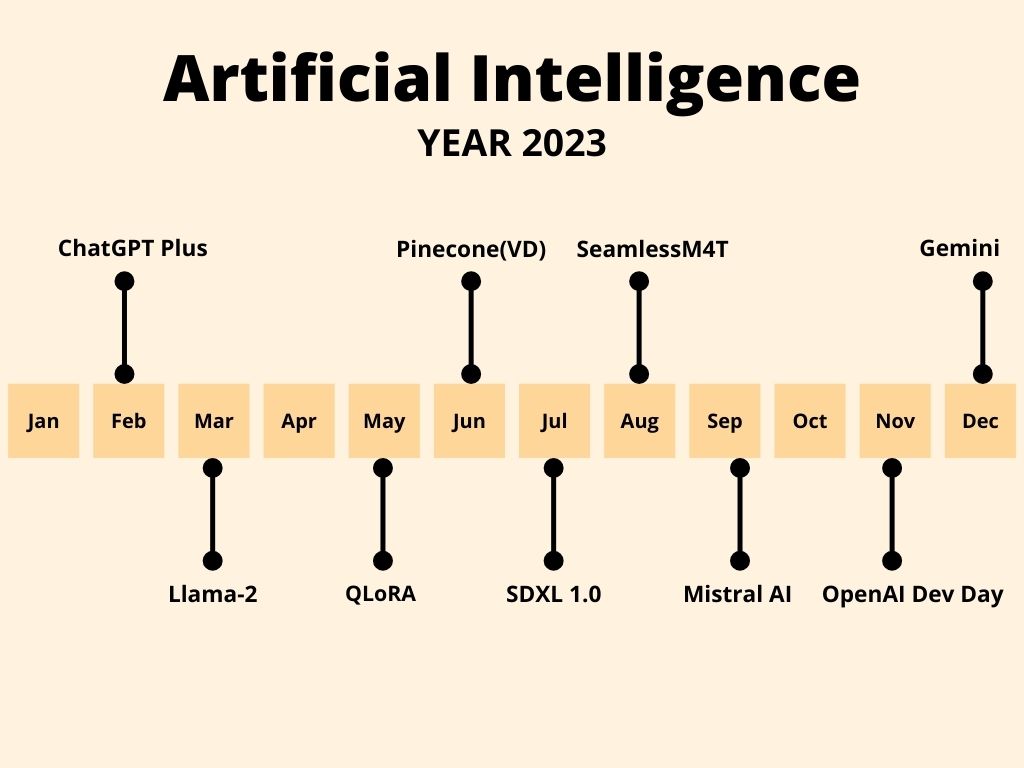

Artificial Intelligence in 2023

Artificial Intelligence has become a household term. Some people are optimistic about it, some are skeptical, and some worry that it will replace us all. Either way, it can no longer be ignored. AI appears to be here to stay, and what we can do now is prepare for the future. Similar to the wheel, …